AI and I

A few weeks ago, I wrote Designing Online Spaces that Everyone Can Navigate. Or more accurately, I generated the article, using OpenAI’s ChatGPT 3.5. I did this as an experiment: I was curious to find out how the tool worked and wondered whether its output could fool my team members. It did. Sorta. Here’s how it went:

First, I fed the ask into ChatGPT:

What does it actually look like to do accessibility online? Equally important, what does it not look like? There are a lot of times when organizations think of accessibility as an afterthought and do a half-ass job at creating an accessible experience because they’re trying to check a social justice box. This is an opportunity to frame up how to think of it at the beginning of a project rather than the end. 700 words

ChatGPT instantly generated an article, stopping mid-paragraph, presumably due to the word count limit. I then prompted the tool to finish the paragraph and it did so.

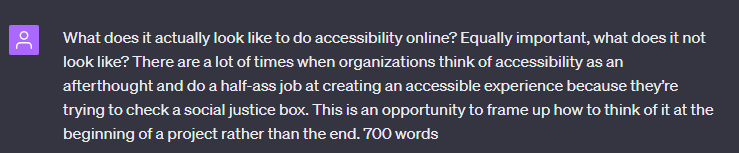

I also asked for article title suggestions and suitable clip-art options and was given five examples of each, but ultimately used OpenAI’s DALL-E to generate a hero image.

After googling to make sure the generated paragraphs weren’t simply lifted from some other article, I passed along this draft to my colleague who edits our newsletter. Here’s what she had to say:

What did you think of the first draft?

I was perplexed. Conveniently you left out the fact that you AI generated this article when you sent it to me, so I was kind of in shock when I got to the fourth or fifth paragraph and realized it was a verbatim duplicate of a previous paragraph.

Ha ha, ChatGPT repeated an earlier sentence in the concluding paragraph. I initially saw this as core statement reinforcement, but now wonder if adding a word count target made ChatGPT pad out the article?

Did it take longer than usual for you to edit the copy?

Absolutely. I spent at least 50% more time editing than I normally do. The biggest challenge was getting the copy to a place where it was at least somewhat skimmable because it’s a newsletter. We want people to be able to at least get the big takeaways if they do decide to skim.

The next challenge was getting the information shared in the piece to not be so baseline, but that would’ve required completely rewriting the article. For example, something like “provide alt-text for images” is standard practice for us. So, I ended up reframing the article to specifically call out in the beginning that the article was for people who were completely unfamiliar with digital accessibility so as not to accidentally insult our audience.

I feel like the generated article was very close to what I would’ve written, if not in my voice, so some of the extra editing time here might’ve been a wash?

Production notes:

OpenAI offers a tool for detecting AI-generated content. The tool classified the original draft as likely to have been AI-generated. After Thamarrah’s final edits, it classified the final version as very unlikely to have been AI-generated.

OpenAI’s DALL-E image generator understands text input but somehow fails to generate readable text in image output. My initial prompt was “A computer keyboard where one of the keys reads ‘Accessibility’” and the result was a set of images of keyboards with alien-looking glyphs and odd approximations of the target word. (See the hero image above.)

Takeaways:

Editor: Right now, ChatGPT is at a place where you’re going to need more than just one prompt to get a result that is more than just the most generic information about a given topic. Personally, the amount of time you’re going to need to spend with it to get there would be better used on just writing a quality article yourself.

Lewis: I’m not an avid writer. I can write, but it’s often a painful exercise that takes hours. For a large language model (LLM) like ChatGPT, it can take seconds. However, there are problems with that. One is that an LLM will flat-out (and convincingly) lie to you. The industry refers to these lies as “hallucinations.” Some LLMs even generate faux reference links to support hallucinated assertions. There are also ethical concerns: Am I, as an LLM user, the author of a generated article? Or is it the writer of the prompt (in this case the editor), or the LLM itself?