Images, Ideas, and AI

There is no cure for illusion. There is only the opportunity for discovery.

― Daniel J. Boorstin, The Image: A Guide to Pseudo-Events in America

In design and marketing, we are constantly using images of people to represent ideas and stand in for concepts. Actual people, having real experiences, performing real labor, or attending actual events, have their images taken and used to represent ideas later on. For example, on a web page, we might use a photograph of a group of volunteers to represent an organization’s entire volunteer program, or to represent a more abstract concept like “community,” “duty,” or “solidarity.” Sometimes, when there are no actual images of events, work, etc., we turn to stock imagery instead. This isn’t done without people’s permission: the law generally requires that people appearing in these images sign some sort of release giving the organization (or seller) the right to use their likeness in this way.

This isn’t inherently good or bad; it’s just one way that images are deployed in our culture today. Real people having real human experiences are flattened into pictures and recontextualized to communicate an idea, which often has very little to do with the person in the photograph.

Things obviously start to get weird when an organization has an idea about itself that it wants to be true, or at least wants an audience to perceive as true, and actively produces images to create that truth.

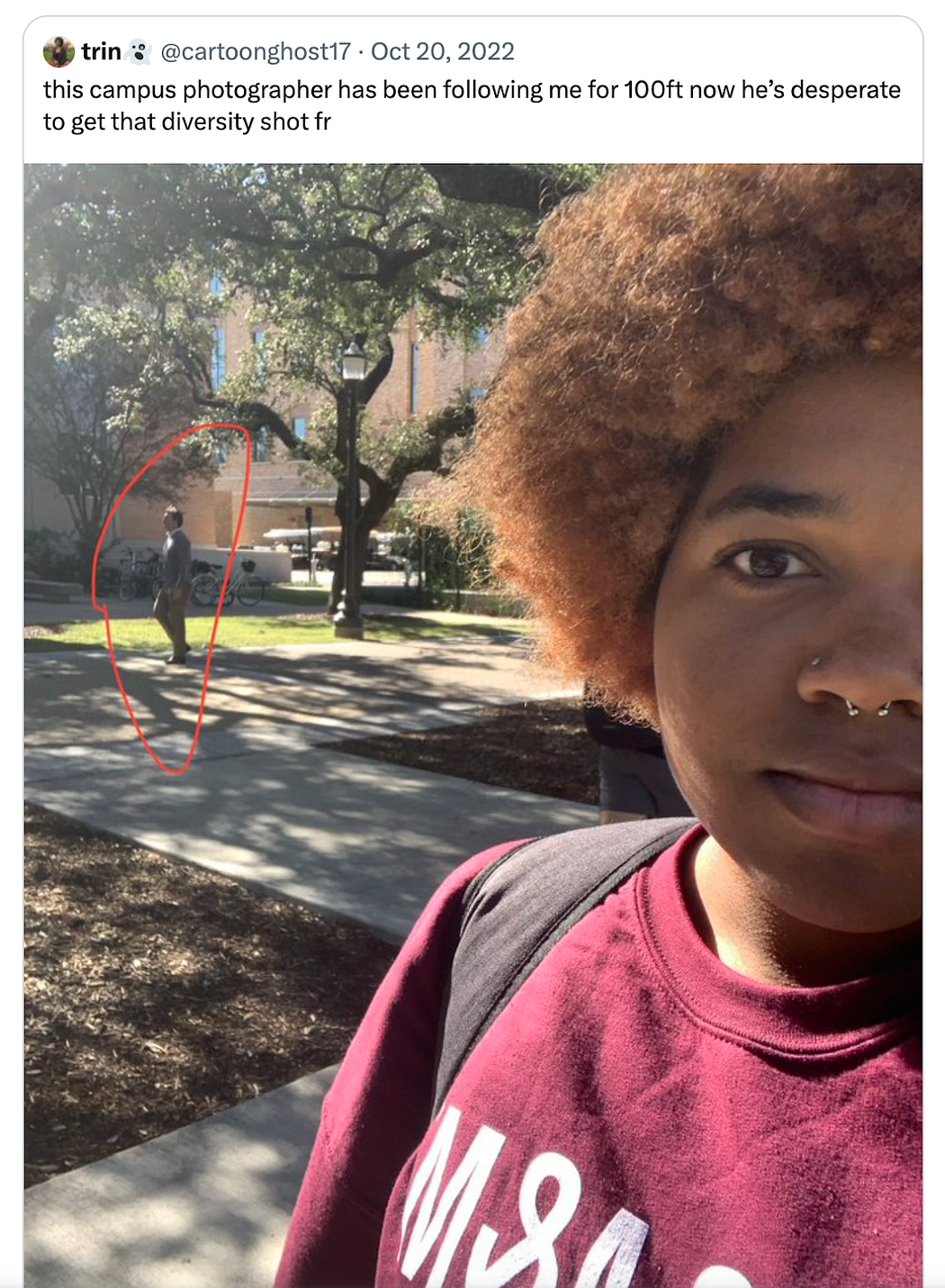

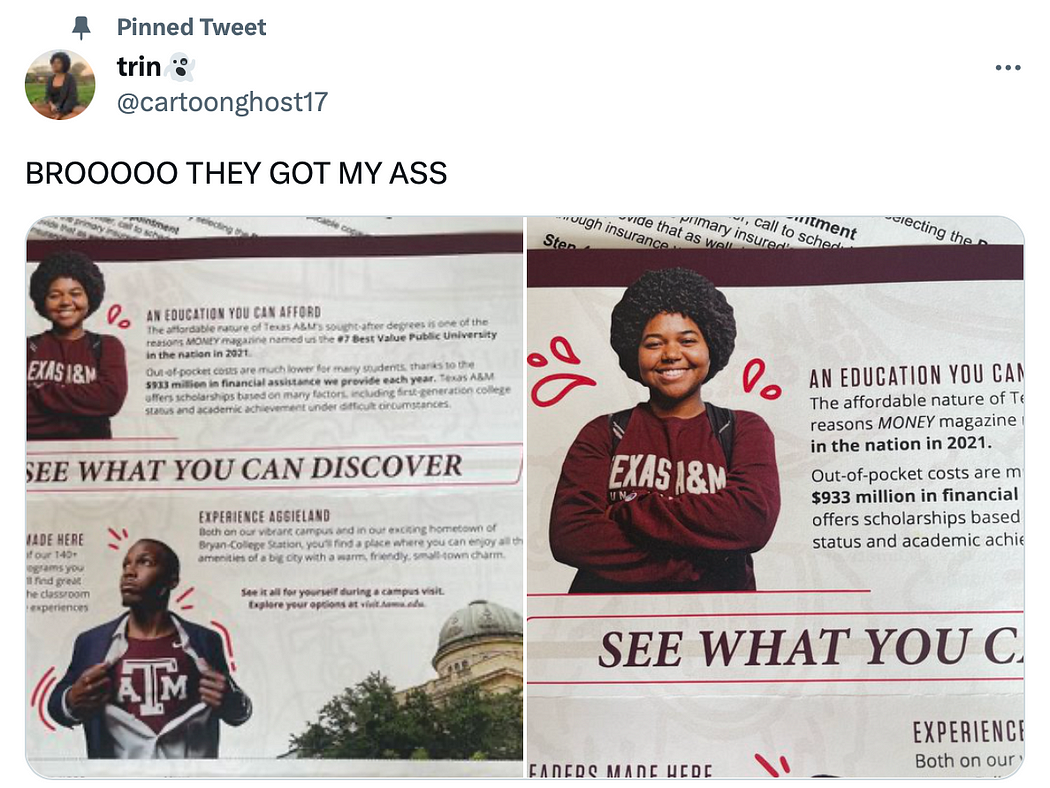

Take these two tweets. Apart from being an objectively funny shot/chaser gently roasting the poster’s school, they also shine a light on the economy of image production in design and marketing. The poster was already aware of how their school wanted to be perceived, and of the kinds of images it wanted to create to reinforce that perception. When they noticed the photographer, they knew that whatever image the school produced of them would be deployed in a certain way, and sure enough, it was.

Enter: AI-generated images. Now, images that are indistinguishable from photographs can be created out of thin air without any sort of reality to back them up. These images can create a perception of an organization that might be completely untrue (or, as some might say, “aspirational,” that is, not yet true) without any grounding in a real event or a photoshoot with real people.

Artificial diversity

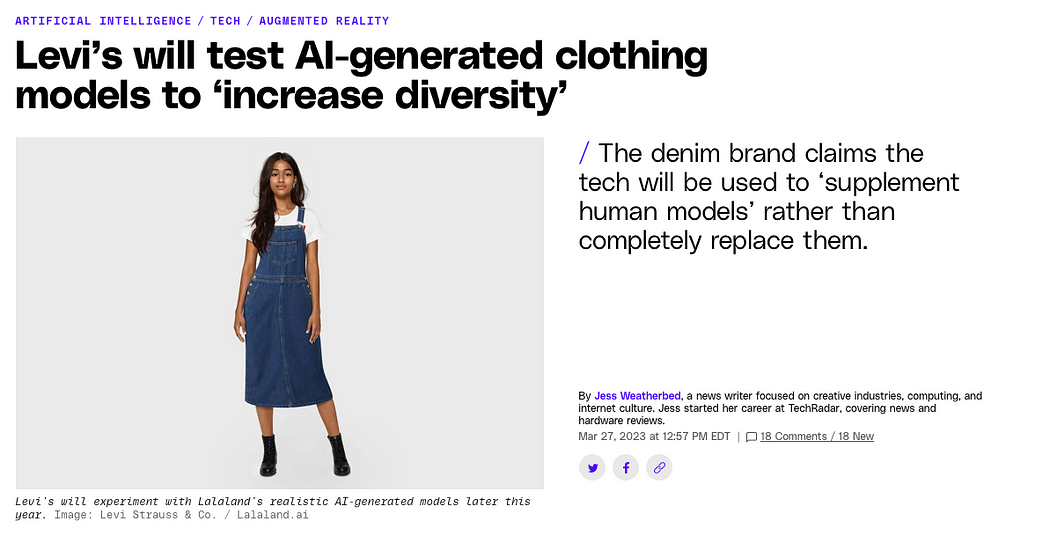

Levi’s wants to be seen as a company that makes clothes for people of color and people with different body types, thereby accessing new markets, making more money, and being perceived as a more inclusive brand. Now, with the power of AI, it can show lots of different people wearing its clothing without the overhead and cost of actually finding living human beings to pay to wear that clothing for photographs, let alone people who would actually choose to buy and wear it in their real lives.

So where is all this headed? Even before the advent of AI, images did a great deal of heavy lifting for organizations seeking to cast themselves in a certain light. With a well-curated set of images, it is possible to reinforce or shift the way your organization is perceived by a mass audience. Because of this, images have a great deal of value, and vast resources get poured into creating images meant to convey ideas.

(De)valuing images and trusting what we see

But with AI, I believe we are about to experience a profound reset in our relationship with images, and a crash in the “value” of images in our culture. Sure, it’s already possible to so completely separate an image from its original context that almost any meaning can be ascribed to it. But when it’s possible for anyone to dream up any image with any person, completely devoid of any original context, with no production overhead beyond a simple text prompt, all images that we encounter in the world will become suspect.

Back in May of this year, much ink was spilled over Amnesty International’s use of AI-generated images to depict protests in Colombia in a promotion for a report on the subject. The organization was criticized for, as one Twitter user quoted in The Guardian put it, “blurring the line between fact and fiction” and “devaluing the work of photographers and reporters.” Amnesty, for its part, released a statement saying that it chose to use the images to protect the identities of protesters. Promoting your report on very real state violence with images created by a computer algorithm is obviously an unforced error and the perfect way to call all your reporting into question.

However, I think the discourse around Amnesty’s use of AI, as opposed to real photography, highlights the way we privilege photography of an event as some kind of sacred, universal truth, when real photos of real happenings can just as easily be used in bad faith. A single well-composed photo of a police officer hugging a protester, for example, can be deployed to call into question people’s accounts of actual police misconduct during a protest.

As a culture, we have been trained to rely on images as a shorthand for what is real. In our media-saturated reality, they are among our most potent and primary ways of understanding the world around us. The time is fast approaching when that will no longer be the case.

Is this “real life”?

We are at an inflection point. Fabricated images created in Photoshop (not to mention real photos used in bad faith) have existed in the media for many years, but now the resources it takes to create a convincing image out of thin air are approaching zero. As a result, we will soon find ourselves in a media landscape flooded beyond all reckoning with fake images.

Reactionary politics thrives when people don’t trust each other, and you can be sure that reactionary elements in American capitalist life will use AI images, and the confusion surrounding them, to drive people further apart. But rather than surrendering to this, we need to use this moment to actively cultivate a greater media literacy in ourselves and those around us. “Real” or not, we don’t have to take an emotionally charged image at face value and instantly share it with our networks. We can instead learn to ask ourselves where an image came from, whether it tells the whole story, who has placed it in front of us, and who stands to gain from the impression it creates. Free from the primacy of images, we can seek out real, lived, spoken truth in each other: in all those people that images claim to depict.